Google crawl rate is what determines how fast your website updates show in the Google search results.

Most website owners would prefer that their pages show up in Google as soon as they are made. After all, this is the first step to ensuring you have a fighting chance in ranking.

For your website to show up in Google, it first needs to be crawled. Until Google crawls your website, it is invisible in the search engine results pages. The more often Google crawls your website, the more quickly your new content will appear in the search results, and getting that content in front of searchers is the whole point of investing in Search Engine Optimization in the first place, right?

What is the Google Crawler?

Google’s crawler is a program designed to find new websites on the internet as well as detect changes to websites. It does this by following links on websites and virtually crawling the internet. Google performs crawling and indexing of websites regularly.

If your website is updated frequently, Google’s crawler will check it more often to stay up to date with any changes. The more frequently Google crawls your site, the sooner Google will know that you have something new to rank. Examples of sites that usually get crawled often are news outlets, community sites (forums) and blogs.

When crawlers come to your website, they follow links to the other pages on your website and gather information to display in the search results. You can make it even easier for Google’s crawlers to navigate your site by implementing a few things:

Create a sitemap:

A sitemap helps Google’s crawlers easily find every page on your site.

Eliminate all 404 errors:

404 errors occur when a link on your site points to a page that does not exist. Google’s bots waste time following broken links, and this can delay how long it takes Google to crawl the rest of your site. Broken links also create a bad user experience, so you should aim to have no 404 errors on your site.
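One rough way to spot broken internal links before Google does is sketched below. It assumes, purely for illustration, that you already have your pages available as a dictionary mapping each URL to its HTML (the `site` dictionary and the example.com URLs are hypothetical); a real check would fetch the pages and look at HTTP status codes.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_internal_links(site):
    """Return (source, target) pairs where target is on the same host
    as source but is not a known page in `site`."""
    broken = []
    for page_url, html in site.items():
        collector = LinkCollector()
        collector.feed(html)
        host = urlparse(page_url).netloc
        for href in collector.links:
            # Resolve relative links and drop any #fragment.
            target = urljoin(page_url, href).split("#")[0]
            if urlparse(target).netloc == host and target not in site:
                broken.append((page_url, target))
    return broken


# Hypothetical two-page site: "/old-page" is linked but does not exist.
site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/old-page">Old</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="https://other.example/">Partner</a>',
}
broken_links = find_broken_internal_links(site)
print(broken_links)
```

External links are skipped on purpose here, since this sketch only knows which pages exist on your own site.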

Remove duplicate content:

Using duplicate content throughout your site is not recommended and could cause the creation of unnecessary pages that Google will need to spend time crawling. Having duplicate content can also negatively affect the position of your site in the rankings.
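As a minimal sketch of how exact duplicates could be found, the snippet below hashes the text of each page after normalizing whitespace and letter case, then groups pages that share a hash. The `pages` dictionary and its URLs are made up for the example, and this approach only catches identical text, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_pages(pages):
    """Return groups of URLs whose page text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the first two differ only in spacing and case.
pages = {
    "/shoes": "Our best running shoes for 2024.",
    "/shoes?ref=home": "Our  best running   shoes for 2024.\n",
    "/boots": "Waterproof hiking boots.",
}
duplicates = find_duplicate_pages(pages)
print(duplicates)
```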

Why Should You Care About Google Crawl Frequency?

The more frequently Google crawls your site, the faster you will see your website changes reflected in Google. Every day that you are not ranking in Google’s results is another missed opportunity. This is especially important for time-sensitive businesses or during time-sensitive holiday sales. For example, if I were a real estate agent, I would want all my new listings to show up on Google as soon as they are posted, since I would be trying to sell each property as soon as possible.

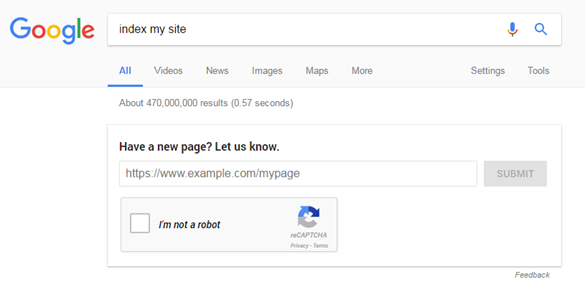

How to Index Your Website on Google

Many SEO companies advertise “indexing” as a service, although it is incredibly easy to do on your own. When you index your website on Google, you are simply asking Google to crawl your site and add it to the results. Many inexperienced website owners complain about not showing up on Google at all because their site has never been crawled. Google has retired its public URL submission form, so the fastest way to submit a page now is to verify your site in Google Search Console, enter the URL in the URL Inspection tool and request indexing.

Sitemap submission

Add your site to Google Search Console and Bing Webmaster Tools, and submit a sitemap to each. Submitting a sitemap is quick and easy, but before you can submit one you need to create it.

If you have a WordPress website, you can use the Yoast SEO plugin to generate a sitemap.

If you use another CMS platform, check its settings or search Google to see whether it includes sitemaps as a feature. You can also try adding /sitemap.xml to the end of your site’s URL to see if one was generated automatically.

If you are not using a CMS and your website was coded from scratch, the easiest way to create a sitemap is to use a sitemap generator and then place the sitemap file in the root of your website.

Sitemap submission should be the very first step for any new website, as it makes your website easy for Google to crawl.
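If you would rather not rely on an online generator, a basic sitemap can also be produced with a few lines of Python. The sketch below is only an illustration: the `build_sitemap` helper and the example.com page list are hypothetical, and it emits only the required `<loc>` entry for each page.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of pages on a hand-coded site.
page_urls = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/contact",
]
sitemap_xml = build_sitemap(page_urls)
print(sitemap_xml)
```

Saving the printed output as sitemap.xml in the root of the site gives you a file you can submit.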

Faster & smaller pages

Google loves websites that improve the user experience, and one of the easiest ways to create a good user experience is a fast load time. Faster load times will also help your website’s conversion rate. Google claims that people start leaving websites when they take longer than 3 seconds to load.

Fast load time is good not only for users but also for Google’s crawler. If your website takes a long time to load pages, you are going to have trouble getting the site crawled efficiently. Reduce your page size and remove unwanted plugins to improve page speed.

You can use Google’s PageSpeed Insights tool to gather more information on how to speed up your website. Keep in mind, though, that the score this tool provides is not a direct measurement of how long your site takes to load; it is a guide to what can be done to improve the current load time. This is a common misconception: even many large and efficient websites get a poor score with this tool because they could still be optimized further.
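To get a feel for how much page size can shrink, the sketch below compares the raw size of some HTML with its gzip-compressed size. Gzip is used here as an assumed example of one size-reduction technique, and the sample markup is invented; your own savings will depend on your pages and server setup.

```python
import gzip


def compressed_size_report(html):
    """Compare the raw and gzip-compressed size of an HTML string."""
    raw = html.encode("utf-8")
    packed = gzip.compress(raw)
    saved = 100 * (1 - len(packed) / len(raw)) if raw else 0.0
    return {
        "raw_bytes": len(raw),
        "gzip_bytes": len(packed),
        "saved_percent": round(saved, 1),
    }


# Hypothetical, repetitive markup similar to a long product listing.
sample_html = "<div class='item'><p>Product description goes here.</p></div>\n" * 200
report = compressed_size_report(sample_html)
print(report)
```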

Good Hosting

Hosting with good uptime is another way to increase Google’s crawl rate. A good server delivers pages faster, which makes it possible for Google to crawl your site faster. Uptime matters too: if your site is often down, then Google will be more hesitant to show it to users. A site that doesn’t load is a bad user experience.

Interlink Website Pages

I would always recommend interlinking your website pages as this allows you to pass authority between pages. If your site is built on WordPress you can find plugins that will automatically interlink your content. Interlinking also helps to provide a better user experience as people will easily be able to find more information on topics.
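A simple way to see which pages need more internal links is to count how many other pages link to each one. The sketch below assumes you have a map of each page to the pages it links to (the `link_graph` shown is hypothetical); pages with zero inbound links are the ones crawlers cannot reach by following links.

```python
def inbound_link_counts(link_graph):
    """Count, for every page, how many other pages link to it."""
    counts = {page: 0 for page in link_graph}
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source and target in counts:
                counts[target] += 1
    return counts


# Hypothetical site structure: page -> pages it links to.
link_graph = {
    "/": ["/blog", "/services"],
    "/blog": ["/", "/blog/post-1"],
    "/services": ["/"],
    "/blog/post-1": [],
    "/landing-page": ["/"],
}
counts = inbound_link_counts(link_graph)
orphan_pages = [page for page, n in counts.items() if n == 0]
print(counts)
print(orphan_pages)
```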

What Is Robots.txt?

Robots.txt is a file that you can put in the root of your website to tell Google which pages it should not crawl. Pages that webmasters commonly block in robots.txt include the administrator login page, private folders, unfinished pages and backups. Keep in mind that robots.txt controls crawling rather than indexing, and the file itself is publicly readable, so it is not a way to protect sensitive files. To keep a page out of the search results, you can instead add noindex and nofollow tags to it using the Yoast SEO plugin.
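Before uploading a robots.txt file, you can check how its rules will be interpreted using Python’s built-in `urllib.robotparser` module. The rules and URLs below are only an example of the kind of folders mentioned above, not a recommended configuration for every site.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; the blocked folders are illustrative.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /backups/
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given crawler may fetch a given URL under these rules.
admin_allowed = parser.can_fetch("Googlebot", "https://example.com/wp-admin/")
blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/")
print(admin_allowed, blog_allowed)
```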

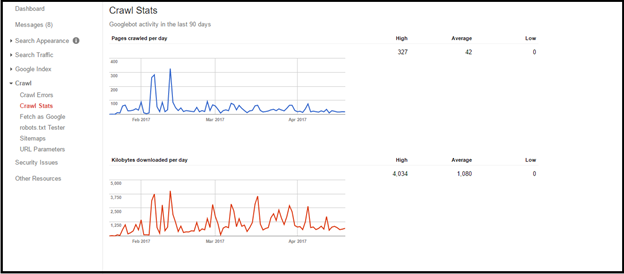

How to Monitor Crawl Rate with Google Search Console?

Once you have applied all the tips above to your website, it is time to monitor your website’s crawl rate with Google Search Console. Monitoring your crawl rate helps ensure that your website stays consistently up to date in the search results.

Before you start using Google Search Console, you will first need to verify your ownership of the domain. After doing that, follow the steps below:

In the current version of Search Console, open Settings, then Crawl stats, and click Open report. (In the older interface the path was Dashboard >> Crawl >> Crawl Stats.)

The Crawl Stats report gives you the number of pages crawled per day, the amount of data downloaded per day by Google’s bots, and the average time spent downloading a page.

If the number of pages crawled decreases and time spent on downloading increases, you need to check the website for errors. Your website might have poorly compressed images, server problems or bloated code that could be hurting Google’s ability to crawl your site.
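If you copy the daily numbers out of the report, the warning sign described above can be checked with a small script. The sketch below compares the first half of a period with the second half; the function name, the half-and-half comparison and the sample figures are all assumptions made for illustration, not something Search Console provides.

```python
from statistics import mean


def crawl_trend_needs_attention(pages_per_day, avg_download_ms):
    """Return True when pages crawled fell AND download time rose
    between the first and second half of the period."""
    if len(pages_per_day) != len(avg_download_ms) or len(pages_per_day) < 2:
        raise ValueError("need two equally long series with at least 2 days")
    half = len(pages_per_day) // 2
    pages_dropped = mean(pages_per_day[half:]) < mean(pages_per_day[:half])
    time_rose = mean(avg_download_ms[half:]) > mean(avg_download_ms[:half])
    return pages_dropped and time_rose


# Hypothetical six days of data copied from the report.
pages = [120, 118, 125, 90, 80, 75]
download_ms = [300, 310, 305, 450, 520, 600]
needs_check = crawl_trend_needs_attention(pages, download_ms)
print(needs_check)
```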

Crawl Stats was totally Unknown to me. Thanks for sharing.

Glad you learned something new. It’s not too common to see posts on the Google crawler, but knowing this kind of information can give you an edge in your SEO campaign.

Please, is it possible to get Google to crawl my site for certain keywords when they are typed into the search engine?